Creating an AI Music Video Generator using Wan 2.1

Hey everyone!

In this blog, we’ll be creating an AI music video generator using the Wan 2.1 model, which is readily available on PiAPI. You can start using it right now in our workspace!

Building an AI music video generator requires a variety of AI tools, including a text-to-video generator, an image-to-video converter, LLMs, and an AI song generator.

Luckily for you, all of these tools are readily available on PiAPI!

Creating the AI Music Video Generator

Step 0: Brainstorm ideas for the music video

Before starting, we first need to brainstorm an idea and a script for the video. Luckily, we have LLM tools for that, such as GPT-4o mini and DeepSeek, both of which you can use in our workspace. I used these tools to generate lyrics for a hip-hop song and to come up with creative concepts for the music video scenes.

Step 1: Audio

If you don't have a song ready, you can start by using Ace-step or Udio, both of which are also available on PiAPI. For this example, I used Ace-step and gave it the lyrics generated by ChatGPT 4o as input. For the prompt, I simply specified the genre as hip-hop and did not add any negative prompts. Here are the two results I got from Ace-step:

We are going to use the first example for the music video creation process.

Step 2: Image Generation (optional)

The next step is image generation. It's optional, since models like Wan 2.1 can generate video directly from text, but we highly recommend using image-to-video generation instead, as in our experience it gives significantly better quality. We generated the images below with a text-to-image AI model. You can use Flux or Midjourney, both of which are available in the PiAPI Workspace.

First, we need a consistent character. We generate the character for the music video first, then pass that image to Midjourney's --cref (character reference) parameter so the same character appears in the later images. Below is the prompt we used to generate the character in Midjourney:
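To make the --cref step a bit more concrete, here is a minimal Python sketch of how a scene prompt with a character reference could be assembled. The helper name `build_scene_prompt`, the example URL, and the scene text are made up for illustration and are not the prompts we actually used. `--cw` (character weight, 0 to 100) is an optional Midjourney parameter that we did not use in this walkthrough.

```python
from typing import Optional


def build_scene_prompt(scene: str, character_url: str,
                       character_weight: Optional[int] = None) -> str:
    """Append --cref (and optionally --cw) to a scene description."""
    # --cref takes the URL of the reference character image.
    if not character_url.startswith(("http://", "https://")):
        raise ValueError("--cref expects a URL pointing to the reference image")
    parts = [scene.strip(), f"--cref {character_url}"]
    if character_weight is not None:
        # --cw controls how closely the reference character is followed.
        if not 0 <= character_weight <= 100:
            raise ValueError("--cw must be between 0 and 100")
        parts.append(f"--cw {character_weight}")
    return " ".join(parts)


if __name__ == "__main__":
    # Hypothetical scene text and reference URL, for illustration only.
    print(build_scene_prompt(
        "hip-hop artist standing on a rooftop at sunrise, cinematic lighting",
        "https://example.com/character.png",
        character_weight=100,
    ))
```

Reusing the same reference URL for every scene prompt is what keeps the character consistent from image to image.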

Below are the three images we generated, which we’ll use as inputs for the music video.

Step 3: Video Generation

For the third step, we’ll use Wan 2.1 as our image-to-video generation model, which is also available for you to use in our PiAPI Workspace. For each clip, we pair one of the images we generated in Midjourney with a prompt describing the motion we want.

Prompt: "Animate a slow-motion zoom-in on an artist stepping confidently into a spotlight, walking through a dark room with flashing neon lights. The neon reflections move fluidly as the artist’s footsteps create soft echoes, focusing on his intense expression. The camera moves in a smooth tracking motion to capture his slow but purposeful approach."

Prompt: "Animate a sweeping aerial shot zooming out from the city to reveal the artist floating in a vast sky filled with glowing clouds. The artist's movements should be slow and fluid, interacting with the environment. The surrounding colors should gradually shift as the scene feels otherworldly and surreal."

Prompt: "Animate a close-up of the artist’s face as he stands on the rooftop. The wind should blow through his hair as he gazes at the horizon. The camera should pull back slowly, revealing the vast city around him, as the sun rises. The motion should be gradual, symbolizing a new beginning."
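If you plan to generate many clips, it helps to keep each image and its prompt together in one place. Below is a minimal Python sketch of that bookkeeping. The image file names, the `Scene` class, the `build_jobs` helper, the dictionary keys, and the model label are all placeholders we made up for illustration; they are not PiAPI's actual request schema, so check the PiAPI documentation before sending anything. The prompts are shortened to the first sentence of each prompt above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    image: str   # path or URL of the still image (hypothetical names below)
    prompt: str  # motion prompt for the image-to-video model


def build_jobs(scenes, model="wan-2.1-image-to-video"):
    """Turn scenes into plain dicts, one per clip.

    The key names and the model label are placeholders, not a real API schema.
    """
    jobs = []
    for index, scene in enumerate(scenes, start=1):
        if not scene.prompt.strip():
            raise ValueError(f"scene {index} has an empty prompt")
        jobs.append({
            "clip": index,
            "model": model,
            "image": scene.image,
            "prompt": scene.prompt.strip(),
        })
    return jobs


scenes = [
    Scene("spotlight.png",
          "Animate a slow-motion zoom-in on an artist stepping confidently "
          "into a spotlight, walking through a dark room with flashing neon lights."),
    Scene("sky.png",
          "Animate a sweeping aerial shot zooming out from the city to reveal "
          "the artist floating in a vast sky filled with glowing clouds."),
    Scene("rooftop.png",
          "Animate a close-up of the artist's face as he stands on the rooftop."),
]

if __name__ == "__main__":
    for job in build_jobs(scenes):
        print(job["clip"], job["image"], "->", job["prompt"][:50])
```

Keeping the scenes in a list like this also makes it easy to regenerate a single clip later without losing track of which prompt belongs to which image.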

Make sure to generate enough clips to cover the full length of your song!
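A quick way to estimate how many clips you need is to divide the song length by the clip length and round up. The sketch below assumes each generated clip runs about 5 seconds; that figure is an assumption, so check the length of your own outputs. The function name `clips_needed` and the `avg_speed` parameter (below 1.0 if you plan to slow clips down in the edit) are ours, added for illustration.

```python
import math


def clips_needed(song_seconds: float, clip_seconds: float = 5.0,
                 avg_speed: float = 1.0) -> int:
    """Estimate how many clips are needed to cover the whole song.

    avg_speed is the average playback speed applied in the edit:
    0.5 means clips are slowed to half speed, so each covers twice as long.
    """
    if song_seconds <= 0 or clip_seconds <= 0 or avg_speed <= 0:
        raise ValueError("all arguments must be positive")
    effective_seconds = clip_seconds / avg_speed
    return math.ceil(song_seconds / effective_seconds)


if __name__ == "__main__":
    # A 2.5-minute song with 5-second clips at normal speed.
    print(clips_needed(150))                 # 30
    # The same song if every clip is slowed to half speed.
    print(clips_needed(150, avg_speed=0.5))  # 15
```

In practice you will probably want a few extra clips on top of this number, so you have options when cutting to the beat.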

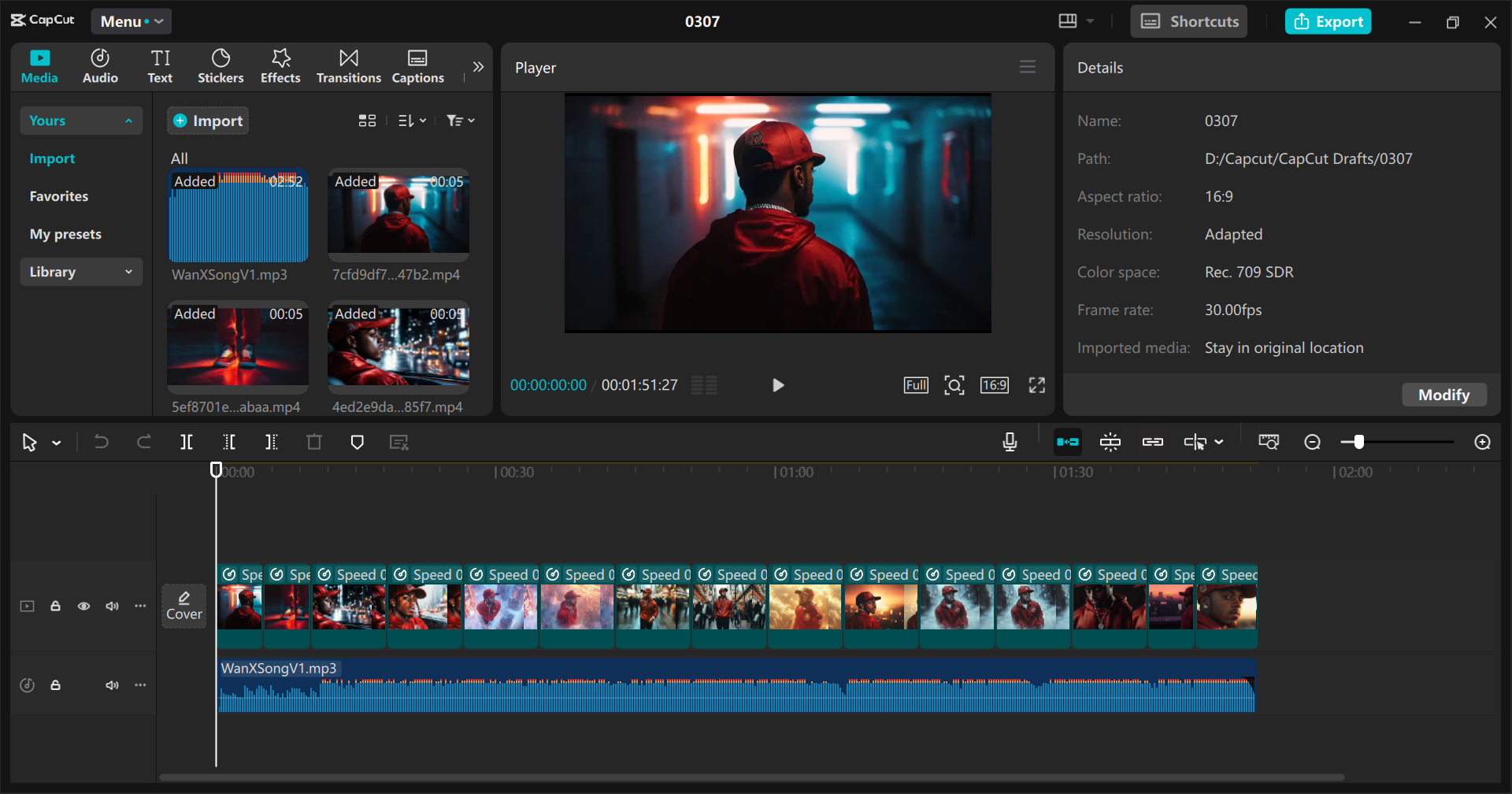

Step 4: Editing

The final step is bringing everything together by combining all the clips you generated with the audio. You can use any editing software for this; we chose CapCut. At this stage, you can adjust the speed of each clip, add smooth transitions, and refine the video as needed. This is where you fine-tune your creation and make sure the visuals and audio are in sync, so the result is a polished, professional-looking music video.
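We did our edit in CapCut, but if you'd rather script a rough first assembly before polishing it in an editor, one option is ffmpeg's concat demuxer. The sketch below is not part of our workflow; it only builds the clip list and the command, and assumes ffmpeg is installed and that all clips share the same codec and resolution (required when copying the video stream without re-encoding). The file names and helper names are hypothetical.

```python
import shlex


def concat_list(clips):
    """Text for ffmpeg's concat demuxer: one `file '<path>'` line per clip, in order."""
    lines = []
    for clip in clips:
        # Inside single quotes, a literal ' is written as '\''
        escaped = str(clip).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def ffmpeg_command(list_file, audio, output):
    """Build (but do not run) an ffmpeg command that joins clips and adds the song."""
    return [
        "ffmpeg",
        "-f", "concat", "-safe", "0", "-i", str(list_file),  # input 0: the clips
        "-i", str(audio),                                    # input 1: the song
        "-map", "0:v:0", "-map", "1:a:0",  # video from the clips, audio from the song
        "-c:v", "copy",                    # keep the video stream as-is
        "-c:a", "aac",                     # encode the audio as AAC
        "-shortest",                       # stop at the shorter of video and audio
        str(output),
    ]


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    print(concat_list(["clip_01.mp4", "clip_02.mp4", "clip_03.mp4"]), end="")
    print(shlex.join(ffmpeg_command("clips.txt", "song.mp3", "music_video.mp4")))
```

To use it, write the output of `concat_list` to `clips.txt` and run the printed command. Speed changes and transitions are still easier to do in an editor like CapCut.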

Step 5: Results

And now, it's time to check out the result, created with several of the AI models available on PiAPI, including Wan 2.1!

Conclusion

We personally think that the results are pretty good, as Wan 2.1 generated some visually appealing clips! It is a great fit as the video generation model in an AI music video workflow, where the clips and the audio are easy to combine. Moreover, given the open-source nature of Wan 2.1 and the impressive results we've seen so far, we're excited to see how the open-source ecosystem will continue to evolve and push the boundaries of AI video creation.

We hope you found this blog useful!

Also, if you are interested in the other AI APIs that PiAPI provides, please check them out!