Luma API & Midjourney API - Exploring the Marvel Multiverse

Introduction

Ever wonder how to breathe new life into your Midjourney creations?

By pairing our Midjourney API with our Luma Dream Machine API, you can turn your AI-generated images into animated videos.

In this blog post, we walk through several examples of these two AI APIs working together to create dynamic videos based on popular fan trends.

Example 1: Tom Cruise Iron Man

In our first example, we’ll explore a fan-favorite scenario: Iron Man portrayed by Tom Cruise. We will use the AI APIs to generate a short scene of this fictional character.

We will test three different workflows for this example to better understand the steps involved and the quality of each output.

Workflow 1: Midjourney to Luma

For this workflow, we first generate an image of this fictional character using the Midjourney API, and then use that image along with a second prompt to generate the video using the Luma API. Below is the image we generated with the Midjourney API, with its prompt in the description (all the prompts used in this blog were edited by GPT).

Now that we have the image from the Midjourney API, we pass it to the Dream Machine API together with a new prompt. The generated video is shown below.

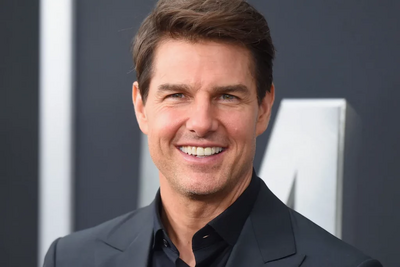
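The two steps in this workflow can be sketched as a small pipeline. This is a minimal illustration only: `generate_image` and `generate_video` are hypothetical callables standing in for whatever client code you use to call the Midjourney API and the Dream Machine API, and the stand-in functions below return placeholder values so the sketch runs without network access or API keys.

```python
from typing import Callable


def image_to_video(
    image_prompt: str,
    video_prompt: str,
    generate_image: Callable[[str], str],
    generate_video: Callable[[str, str], str],
) -> str:
    """Chain the two steps: text prompt -> image URL -> video URL."""
    # Step 1: ask the image API for a still image (hypothetical client).
    image_url = generate_image(image_prompt)
    # Step 2: hand that image, plus a second prompt, to the video API.
    return generate_video(video_prompt, image_url)


# Stand-in clients (assumptions, not real API calls).
def fake_midjourney(prompt: str) -> str:
    return "https://example.com/image.png"


def fake_luma(prompt: str, image_url: str) -> str:
    return f"video-from:{image_url}"


if __name__ == "__main__":
    result = image_to_video(
        "placeholder image prompt",
        "placeholder video prompt",
        fake_midjourney,
        fake_luma,
    )
    print(result)
```

Passing the clients in as arguments keeps the chaining logic separate from the request details, so the stand-ins can be swapped for real calls once you have checked the request format in each API's documentation.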

Workflow 2: Real Image to Luma

For the second workflow, we have found a real image of Tom Cruise on the internet (see below), and we will use it along with a new prompt to generate the video using the Dream Machine API.

In the prompt for this workflow, we have to specify the "man in a red and yellow Iron Man suit" because the Tom Cruise image we found does not show him in the suit. In contrast, the image in Workflow 1 was generated by Midjourney and already includes the suit, so its video prompt omits that description.

Below is the video generated using the prompt (shown under the video GIF) and the input image found online.

Workflow 3: Just using Luma

For the final workflow, we do not give Luma any input image; we use only a relevant prompt to generate the video with the API.

The main difference between the prompt for this workflow and the one for Workflow 1 is that in Workflow 1 the image already features Tom Cruise in an Iron Man suit, so we don't need to specify the "Iron Man suit" part. This workflow has no initial image, so the suit has to be specified in the prompt.

Below is the video generated by the Luma API, using only the prompt shown under the GIF.
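The prompt differences across the three workflows come down to one rule: describe the suit in the text only when the input image does not already show it (or when there is no image at all). The sketch below illustrates that rule. The function names, the `prompt` and `image_url` field names, and the action text are all assumptions made for illustration; they are not the actual request format of the Luma API or the prompts used in this post.

```python
from typing import Optional

SUIT = "man in a red and yellow Iron Man suit"


def build_video_prompt(action: str, subject: str, image_shows_subject: bool) -> str:
    """Prepend the subject description only when the image does not carry it."""
    if image_shows_subject:
        return action
    return f"{subject}, {action}"


def build_request(prompt: str, image_url: Optional[str] = None) -> dict:
    """Assemble a hypothetical request body; the image is optional."""
    payload = {"prompt": prompt}
    if image_url is not None:
        payload["image_url"] = image_url
    return payload


if __name__ == "__main__":
    action = "walks toward the camera"  # placeholder action text
    # Workflow 1: the Midjourney image already shows the suit.
    print(build_request(build_video_prompt(action, SUIT, True), "https://example.com/mj.png"))
    # Workflow 2: real photo without the suit, so the prompt must describe it.
    print(build_request(build_video_prompt(action, SUIT, False), "https://example.com/photo.png"))
    # Workflow 3: no image at all, so the prompt must describe everything.
    print(build_request(build_video_prompt(action, SUIT, False)))
```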

Takeaways

Comparing the results above, we think Workflow 1 (Midjourney to Luma) produces the most realistic and detailed output.

In Workflow 2 (Real image to Luma), we refined the prompt to specify Tom Cruise wearing a red and yellow Iron Man suit, as he obviously wasn't wearing one in the real image. However, despite the prompt, the final result did not include the suit.

In Workflow 3 (Just using Luma), we also refined the prompt to specify Tom Cruise wearing a red and yellow Iron Man suit, as no initial image was used. The result is not as high resolution as that from Workflow 1. We think this is because the high quality image from Midjourney "primed" the Luma model to generate higher-quality video.

Thus, if you want the best possible output, we recommend using the Luma Dream Machine API together with an image generated by Midjourney.

Example 2: Henry Cavill Wolverine

In the second example, we’ll revisit a concept that fans of the movie Deadpool & Wolverine may be familiar with: Wolverine played by Henry Cavill.

Although many may be disappointed by his brief cameo in the movie, Luma API and Midjourney API will allow us to explore an alternate reality where Henry Cavill took on the iconic role of Wolverine, instead of Hugh Jackman.

First, we used the Midjourney API to generate an image of Henry Cavill as Wolverine; the output image and its prompt are shown below.

Then, we passed the generated image to the Luma API with a new prompt. The output GIF from Luma and the prompt used are shown below. As you can see, the output is quite dynamic.

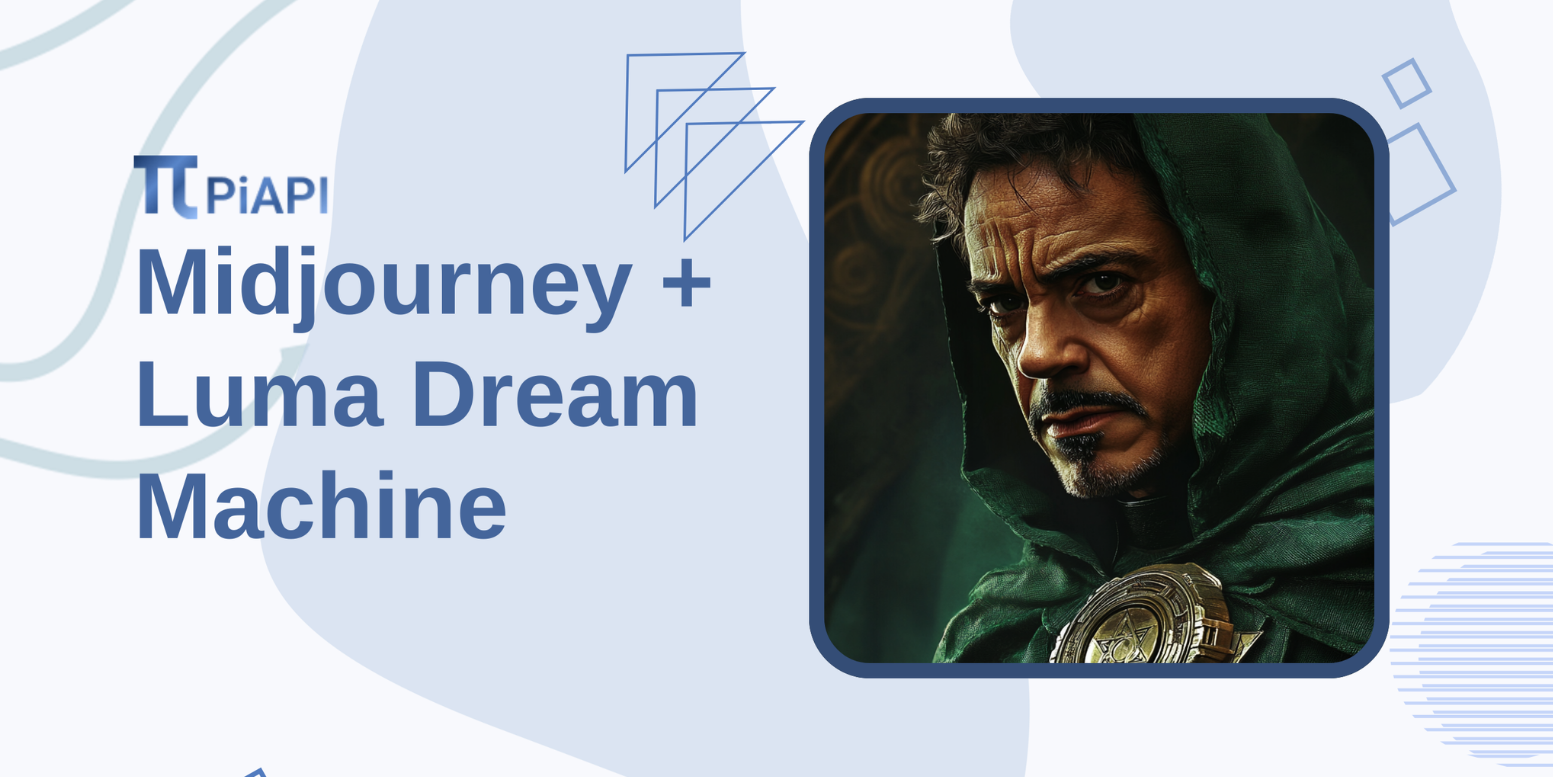

Example 3: Robert Downey Jr. Dr. Doom

In the final example, we'll explore the highly anticipated concept of the fictional character Dr. Doom, portrayed by Robert Downey Jr. (RDJ).

RDJ as Doom takes the Marvel universe in a new direction, and by using the APIs, you can visualize this concept before Marvel releases its first glimpse of Robert Downey Jr. as Dr. Doom.

As with the previous examples, we will first need to generate an image of RDJ Doom using Midjourney API with the prompt provided under the output image.

The image generated will then be used in the Luma API, along with a prompt shown below the output GIF. We personally like the intense look on the character and the clockwork details as the camera pans to the left.

Conclusion

Now it's easy to see why combining our Luma Dream Machine API with our Midjourney API is a strong choice for animated visuals. Feeding Midjourney images into Luma preserves a high level of detail, and Luma animates the input well, resulting in good-quality output videos.

We hope you've enjoyed our little experiment and that you'll try a few ideas of your own!

If you're interested in our other AI APIs, feel free to check them out!

Happy creating!