Kling 1.6 Model through Kling API

Hi developers!

On December 19th, 2024, Kuaishou, the company behind Kling AI, released its new model, Kling 1.6, announcing it in a post on X! And here at PiAPI, the Kling 1.6 API is already available to our users!

With the release of Kling 1.6, Kuaishou claims that the new model delivers improved prompt adherence, more consistent and dynamic results, and a 195% overall improvement over the Kling 1.5 model. Since the Kling API (before the update) was already one of the top AI video generation tools, alongside the Pika API and the Luma Labs Dream Machine API, this update could significantly enhance img2vid prompting for AI movie creation.

To put these claims to the test, we at PiAPI ran our own comparisons of versions 1.5 and 1.6 for both text-to-video and image-to-video generation. The evaluation frameworks we used are described below, followed by the results.

Evaluation framework

Text-to-video

For the text-to-video comparisons between the Kling 1.6 API and the Kling 1.5 API, we use the comprehensive text-to-video evaluation from Labelbox, plus an extra "text adherence" criterion. This is the same framework we used in our previous blog comparing Luma Dream Machine 1.0 vs 1.5, namely:

- Prompt Adherence
- Text Adherence
- Video Realism
- Artifacts

Image-to-video

For the image-to-video comparisons between the Kling 1.6 API and the Kling 1.5 API, we use AIGCBench (Artificial Intelligence Generated Content Bench). This is the same framework we used in our previous blog about using the Kling Motion Brush through the Kling API, namely:

- Control-Video Alignment (Prompt adherence)
- Motion Effects
- Temporal Consistency
- Video Quality
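To make the two frameworks concrete, here is a minimal Python sketch of how per-criterion verdicts could be recorded and tallied. The criterion names come from the lists above; the verdict labels ("1.5", "1.6", "tie") and the simple win-count rule are illustrative assumptions of ours. In the examples that follow, the overall call is an editorial judgment rather than a mechanical count.

```python
from collections import Counter

# Criterion names taken from the two evaluation frameworks.
TEXT_TO_VIDEO_CRITERIA = (
    "Prompt Adherence",
    "Text Adherence",
    "Video Realism",
    "Artifacts",
)
IMAGE_TO_VIDEO_CRITERIA = (
    "Control-Video Alignment",
    "Motion Effects",
    "Temporal Consistency",
    "Video Quality",
)

# Assumed verdict labels: which version won a criterion, or a tie.
VALID_VERDICTS = {"1.5", "1.6", "tie"}


def tally(verdicts: dict[str, str], criteria: tuple[str, ...]) -> str:
    """Return the version that wins more criteria, or "tie" if equal."""
    missing = set(criteria) - verdicts.keys()
    if missing:
        raise ValueError(f"no verdict for: {sorted(missing)}")
    for criterion in criteria:
        if verdicts[criterion] not in VALID_VERDICTS:
            raise ValueError(f"bad verdict for {criterion!r}: {verdicts[criterion]!r}")
    # Count only decisive wins; ties do not favor either version.
    wins = Counter(verdicts[c] for c in criteria if verdicts[c] != "tie")
    if wins["1.5"] > wins["1.6"]:
        return "1.5"
    if wins["1.6"] > wins["1.5"]:
        return "1.6"
    return "tie"


if __name__ == "__main__":
    # Text-to-video Example 2, encoded from the write-up: only prompt adherence differs.
    example_2 = {
        "Prompt Adherence": "1.5",
        "Text Adherence": "tie",
        "Video Realism": "tie",
        "Artifacts": "tie",
    }
    print(tally(example_2, TEXT_TO_VIDEO_CRITERIA))  # prints 1.5
```

Encoding text-to-video Example 2 this way (Kling 1.5 wins prompt adherence, ties elsewhere) yields "1.5", which agrees with the verdict given for that example.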

Text-to-video Comparison

For the text-to-video comparisons between the Kling 1.6 API and the Kling 1.5 API, we simply feed the exact same prompt into both models and then compare the resulting videos side by side.
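The key to a fair side-by-side test is that the two requests differ only in the model version. A minimal Python sketch of that idea follows. It only builds request payloads and does not send them; the keys "model", "task_type", "input" and "version" are hypothetical placeholders, not PiAPI's confirmed schema, so check the Kling API documentation for the real field names and endpoint.

```python
import json


def build_text_to_video_task(prompt: str, version: str) -> dict:
    """Build one hypothetical text-to-video task payload."""
    return {
        "model": "kling",                 # assumed model identifier
        "task_type": "video_generation",  # assumed task type name
        "input": {"prompt": prompt, "version": version},
    }


def paired_tasks(prompt: str, versions: tuple[str, ...] = ("1.5", "1.6")) -> list[dict]:
    """One task per model version, all sharing the exact same prompt."""
    return [build_text_to_video_task(prompt, v) for v in versions]


def differs_only_in_version(a: dict, b: dict) -> bool:
    """True if two tasks are identical apart from input["version"]."""
    def without_version(task: dict) -> dict:
        inputs = {k: v for k, v in task["input"].items() if k != "version"}
        return {**task, "input": inputs}
    return without_version(a) == without_version(b)


if __name__ == "__main__":
    # Illustrative prompt paraphrased from Example 1's description, not the actual prompt used.
    prompt = "A Christmas tree with a golden star on top, the camera rotating around the tree"
    tasks = paired_tasks(prompt)
    assert differs_only_in_version(*tasks)
    print(json.dumps(tasks, indent=2))
```

Keeping the prompt in a single variable and generating both payloads from it rules out accidental wording differences between the two runs.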

Example 1

Both videos have some issues with adhering to the prompt. In the video generated by the Kling 1.5 API, the golden star is not placed on top of the Christmas tree. Meanwhile, in the video generated by the Kling 1.6 API, the camera fails to rotate around the tree as expected.

While both videos are quite realistic, the Kling 1.6 video loses some points due to the snow falling indoors, which doesn't follow real-world physics. Apart from that, neither video exhibits any noticeable artifacts.

Overall, for this particular example, Kling 1.5 performs slightly better than Kling 1.6.

Example 2

Both videos show a woman running on a track while holding a bottle of water, but in the Kling 1.6 API video she never drinks from it, whereas Kling 1.5 follows the prompt by including this detail. Both models exhibit poor text adherence, as the words on the woman's shirt in both videos do not resemble "NFL" in any way. Despite this, both videos are realistic and free of visible artifacts.

Overall, the Kling 1.5 API adheres to the prompt better than the Kling 1.6 API.

Example 3

Both videos show a cartoon-style bird sneezing into something, but they differ in how closely they follow the prompt. Kling 1.6 adheres more closely by having the bird sneeze into a tissue, while Kling 1.5 shows the bird sneezing into a pink towel. As for text adherence, neither video follows the prompt: the words "bird flu medicine" do not appear on the billboards behind the birds.

In terms of animation style, both videos look good within their respective cartoon animation styles. However, there are some visible artifacts in each. In the Kling 1.6 AI API video, the hand holding the tissue appears too humanoid, resembling a human hand rather than a bird wing. Additionally, the fingers seem to pass through the tissue. In the Kling 1.5 API video, a flickering artifact briefly appears in the bottom left corner of the screen during the middle of the video.

Overall, both videos are similar in quality; with a little bit more improvement, Kling 1.6 API could even be a good animated movie generator!

Image-to-video Comparison

For the image-to-video comparisons between Kling 1.6 API and Kling 1.5 API, we will first generate an image using Midjourney API. This image, along with an identical prompt, will then be used as input for both models.
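The image-to-video setup chains two steps: a Midjourney image, then that same image and prompt sent to both Kling versions. Below is a minimal Python sketch of the second step, assuming the Midjourney image is already reachable at a public URL. The payload keys (including "image_url") and the example URL are hypothetical placeholders rather than PiAPI's confirmed schema, and the sketch only builds payloads without sending them.

```python
import json
from urllib.parse import urlparse


def build_image_to_video_tasks(
    image_url: str,
    prompt: str,
    versions: tuple[str, ...] = ("1.5", "1.6"),
) -> list[dict]:
    """Build one hypothetical image-to-video task per Kling version.

    Every task shares the same image and prompt, so the model version is the
    only variable between them.
    """
    parsed = urlparse(image_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"expected an absolute http(s) image URL, got {image_url!r}")
    shared_input = {"prompt": prompt, "image_url": image_url}  # assumed key names
    return [
        {
            "model": "kling",                 # assumed model identifier
            "task_type": "video_generation",  # assumed task type name
            "input": {**shared_input, "version": version},
        }
        for version in versions
    ]


if __name__ == "__main__":
    # Placeholder URL standing in for the Midjourney output image.
    image_url = "https://example.com/midjourney-output.png"
    # Illustrative prompt paraphrased from image-to-video Example 1, not the actual prompt used.
    tasks = build_image_to_video_tasks(image_url, "Superman flies as the camera zooms in on his face")
    print(json.dumps(tasks, indent=2))
```

Validating the URL up front catches a common mistake when chaining tasks: passing a local path or an empty result from the image step into the video step.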

Example 1

Below is the image generated using Midjourney API, which was then used as input for Kling API in this example.

Now that we have an image generated by the Midjourney API, we input that image, along with a new prompt, into both the Kling 1.5 API and the Kling 1.6 API. The generated videos are shown below.

The videos generated by both Kling 1.5 API and Kling 1.6 API closely follow the prompt, having Superman flying with the camera zooming in on his face. Both videos exhibit a similar level of dynamism, particularly with the capes flowing in the background, and maintain consistent motion throughout. There are no noticeable artifacts in either video.

Overall, the quality of both videos is similar.

Example 2

Below is the image generated using Midjourney API, which was then used as input for Kling AI API in this example.

Now that we have an image generated by the Midjourney API, we input that image, along with a new prompt, into both the Kling 1.5 AI API and the Kling 1.6 AI API. The generated videos are shown below.

The Kling 1.6 AI API adheres to the prompt more closely than the Kling 1.5 AI API. In the video generated by Kling 1.6, the cat jumps directly toward the camera, while in the video created by Kling 1.5, the cat jumps to the left. Both videos are dynamic, but the Kling 1.6 video is much more realistic: Steve Harvey's facial expression looks more natural, and both his movements and the cat's appear more lifelike. Additionally, the Kling 1.6 video is free of visible artifacts, unlike the Kling 1.5 video, where a noticeable artifact appears after the cat jumps from Steve Harvey's hands: a small, cat-like creature seems to appear out of nowhere, which is clearly unrealistic.

Overall, the video produced by Kling 1.6 is far better in terms of quality when compared to the one generated by Kling 1.5.

Example 3

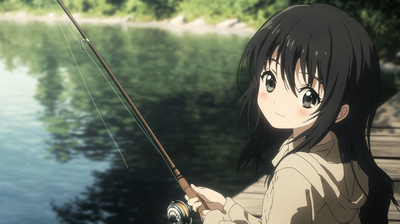

Below is the image generated using Midjourney API, which was then used as input for Kling API in this example.

Now that we have an image generated by the Midjourney API, one that looks straight out of an anime AI art generator, we input that image, along with a new prompt, into both the Kling 1.5 API and the Kling 1.6 API. The generated videos are shown below.

Neither video fully adheres to the prompt. In the video generated by Kling 1.5 API, the girl fails to pump her fist in the air after catching the fish. Meanwhile, in the Kling 1.6 API video, there is no fish at all.

Both videos feature dynamic motion effects. The lake's water in the background moves realistically, and the girl moves dynamically in both clips. However, the animation in the Kling 1.6 video appears much smoother overall, and the Kling 1.5 video contains a very visible artifact: the girl's face morphs unnaturally.

Overall, Kling 1.6 is slightly better than Kling 1.5 for this example.

Conclusion

Based on the six examples above, the differences between the Kling 1.5 API and the Kling 1.6 API for text-to-video generation are minimal. In fact, judging by the three text-to-video examples we analyzed, you could even argue that Kling 1.5 outperforms Kling 1.6 in this area.

However, when it comes to image-to-video generation, the Kling 1.6 API shows significant improvements, particularly in movement quality and in how well the model follows movement-related prompts. That said, we don't believe it amounts to the 195% improvement over Kling 1.5 that Kuaishou claimed, but these improvements make the tool very valuable for workflows such as creating a motion meme.

With this major leap, Kling 1.6 is on par with, or even exceeds, other tools on the market, such as the popular Sora video generator. It turns images into videos very well, it can serve as a tool for artwork creation (e.g., for developers building an AI frame generator), and it can even be a great tool for AI lip sync.

We hope you found our comparison useful! If you are interested, check out our other generative AI APIs at PiAPI!